
Netflix’s recent documentary ‘Coded Bias’ has shed some light on another aspect of this new data-driven world: racism. It explores the threat to civil liberties that a world dominated by racist AI algorithms poses. And just as in the past few hundred years, Black people might be the ones who predominantly get the bad end of this deal.

Coded Bias: How racist is AI?

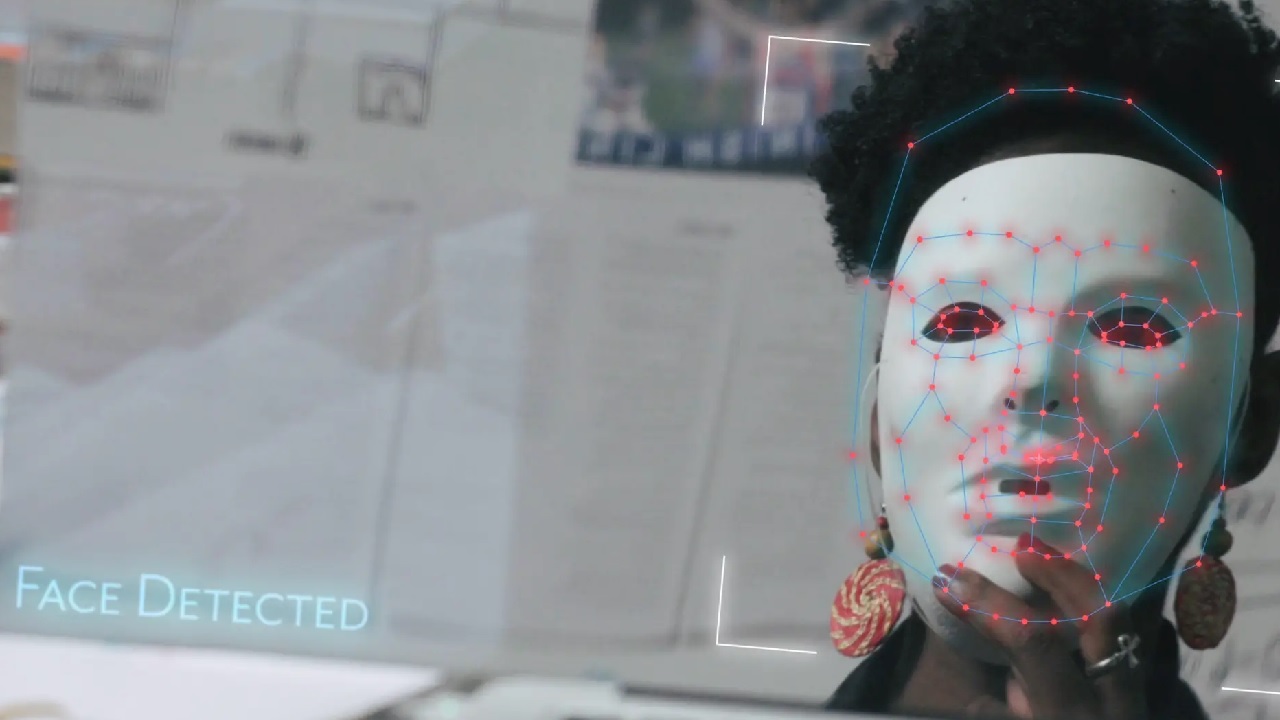

The journey for Coded Bias began after MIT Media Lab researcher Joy Buolamwini discovered that most facial recognition software does not recognize dark-skinned faces or women accurately. As a result, she embarked on an investigation that revealed widespread racial bias within the algorithms that everyone uses on a daily basis.

With each passing day, technology creeps further into our lives. And most people surrender their privacy in return for the ease that tech and AI provide. But this automated world seems to have a racist bias built into it. And handing over our world to racist AI is a threat too large for vulnerable minorities to ignore. Coded Bias, directed by award-winning filmmaker Shalini Kantayya, follows Buolamwini in her fight to expose racial bias and discrimination in facial recognition algorithms and AI. She is joined in her endeavors by several data scientists, watchdog groups, and mathematicians. What they discover is that racist AI software and algorithms are prevalent all around the world.

Coded Bias explains how artificial intelligence has become an almost fundamental part of all our lives. This includes hiring processes, determining recipients of health insurance, the length of prison terms, and so much more. The film then explores how police in the UK are using facial recognition technology, and how, in China, the city of Hangzhou is quickly becoming a model for city-wide surveillance. Coded Bias also looks at how AI is affecting the individuals subjected to these disruptive technologies. Moreover, director Kantayya interviewed data scientists, ethicists, and mathematicians who are working hard against biased and racist AI. After all, biased AI has had a negative impact on civil rights and democracy, and they are calling for greater accountability.

How can a machine be racist?

The main reason governments and companies are quick to replace humans with machines is perceived “machine neutrality”. Throughout history, humans have exhibited sexist and racial biases. Some of it is conscious, while much of it is unconscious. And so, to make sure that this bias doesn’t creep into our systems anymore, society decided to automate many of its processes. But how did we end up with racist machines and racist artificial intelligence?

Coded Bias explains that machine learning, which is a form of artificial intelligence, takes in tons of past data, learns the patterns in it, and uses those patterns to make predictions. It is the major way that tech companies use past data to predict the future and target specific audiences for better marketing. Similarly, machine learning is also important in developing surveillance models. But even when the programmers themselves are not racist, why do we see racist algorithms? It’s because those algorithms simply mimic the real-world data that is fed into them. And that data belongs to a world with inherent racial and gendered bias. These algorithms are unable to look past that bias, so they continue to recreate the biases in the data. As a result, we see sexist and racist discrimination in their output.
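To make that idea concrete, here is a minimal Python sketch of a toy "model" that learns from biased hiring records. It is only an illustration, not how any real hiring system works: the records, the counts, and the `train` and `predict` helpers are all made up for this example.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (gender, qualified, hired).
# The counts are invented for illustration; they are not real data.
# Every candidate here is equally qualified, but women were hired less often.
HISTORY = (
    [("m", True, True)] * 80 + [("m", True, False)] * 20
    + [("f", True, True)] * 30 + [("f", True, False)] * 70
)


def train(records):
    """Learn the historical hire rate for each (gender, qualified) group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for gender, qualified, was_hired in records:
        total[(gender, qualified)] += 1
        hired[(gender, qualified)] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def predict(model, gender, qualified, threshold=0.5):
    """Recommend a candidate when the learned hire rate reaches the threshold."""
    return model.get((gender, qualified), 0.0) >= threshold


model = train(HISTORY)
print(model[("m", True)], predict(model, "m", True))
print(model[("f", True)], predict(model, "f", True))
```

Nothing in this code mentions prejudice, yet the toy model recommends the qualified man and turns away the equally qualified woman, simply because that is what the past data looked like. The threshold even sharpens the gap: a group that was hired 30% of the time in the made-up history ends up never being recommended at all.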

The effects of racist AI

The implications of this are far-reaching. For instance, Amazon once adopted a machine learning algorithm for hiring. But the AI systematically downgraded resumes belonging to women. This was because the Amazon workplace was male-dominated. The AI mimicked that past data, and it not only continued Amazon’s sexist approach to hiring but made it worse.

Similarly, Coded Bias revealed that there are other instances where machine learning is adopted despite its flaws. In the UK, CCTV and other surveillance cameras record citizens’ facial biometric data without their consent. But those models are more than 98% inaccurate in identifying wanted suspects. Yet they continue to be adopted without any regulation, transparency, or accountability.

This also brings us to how big tech, and Facebook in particular, is able to influence real-life decisions with small updates. For instance, by showing users how many of their friends went to vote on election day, Facebook was able to increase voter turnout in a US election by over 300,000 votes. For comparison, the 2016 election was decided by a mere 100,000 votes. Facebook can literally change the outcome of elections with a seemingly small change. Yet it goes unregulated by politicians, who in many cases are too old to understand the impact of technology in this day and age.

These are the important issues that Coded Bias highlights in a data- and tech-driven world. Make sure you catch it on Netflix.
